Knowing how to detect AI in writing has become a vital skill, especially if you work in the content field. These days, many people see AI writing as a shortcut to improve their writing without putting in any effort. Whether AI can be detected in writing depends on many factors.

But today, we have brought the most detailed guide to help you detect AI writing, so you won’t have to search around anymore! Let’s start reading now!

Basically, AI writing means using artificial intelligence (AI) to produce content. Users begin by entering a prompt, and the language model then draws on its extensive training data to respond to the query.

Every AI authoring tool is unique, and there is a wide variety available. These tools can assist in planning blogs, creating compelling headlines, and even generating entire blog posts. With the rapid advancement of AI writing, detecting AI-generated content will become increasingly challenging.

That’s where the question “how to detect AI in writing” comes in. The answer lies in AI detection, the process of determining whether a document was written by AI or by a human.


AI detection plays an important role, especially in academia or fields requiring accuracy and human experience, something AI writing can’t create.

There are various ways to answer the question: How can AI be detected in writing? Professional writers, researchers, or professors can often identify AI-generated text by instinct.

Here, TechDictionary has included the 13 most common signs of AI-generated work. You should combine more than two of them to get the best result.

Today, there are countless AI detectors to help you detect AI-generated works. These detecting tools usually analyze the submitted text’s writing style and tone to find particular patterns indicating AI writing.

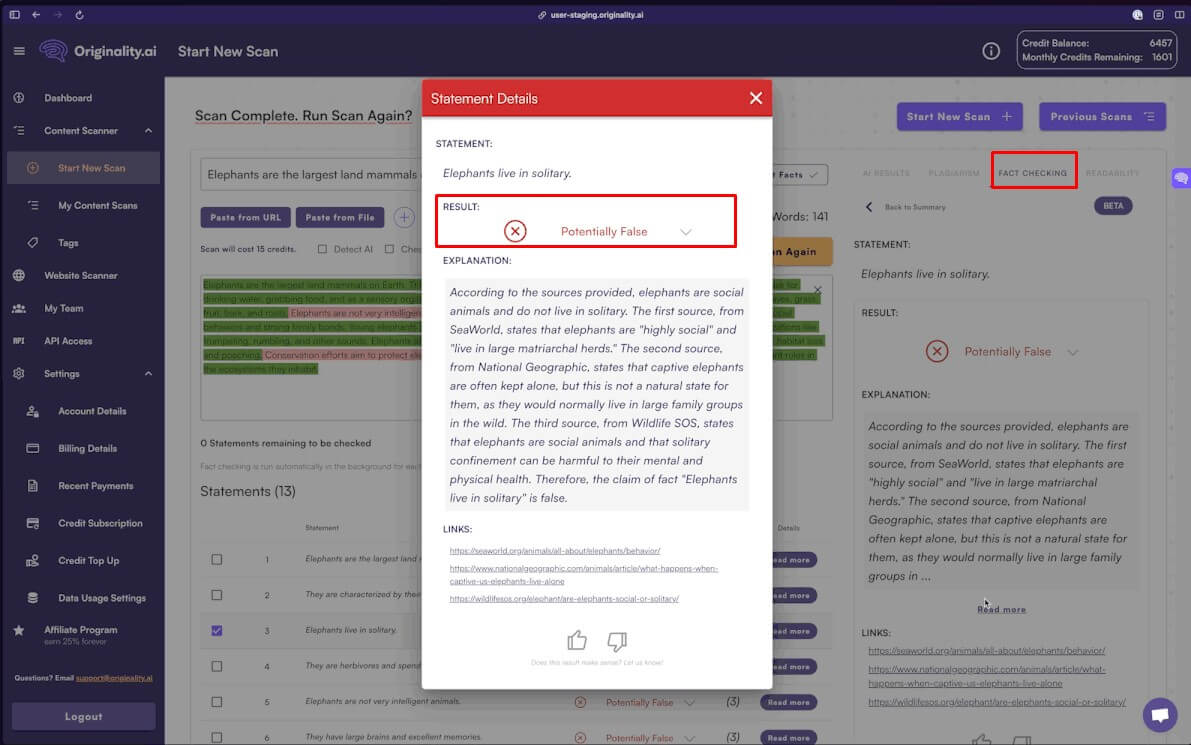

Originality AI – An AI Detector Tool

For example, you can use Originality AI to examine a piece of writing. This online AI detector marks incorrect information in red, like the statement “elephants live in solitary” in the image. The result also explains why Originality AI reached that decision and suggests corrections.

Are AI detectors accurate? In fact, these tools aren’t 100% trustworthy, as they can flag human writing as AI text. Even Originality AI states on its website that it has a 2% false-positive rate. So, you should only use them as a supplementary verification method.
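
To put that 2% figure in perspective, here is a rough back-of-the-envelope sketch in Python. The batch size of 500 human-written texts is a made-up number for illustration, and the function name is hypothetical; only the 2% rate comes from the claim cited above.

```python
def expected_false_flags(num_human_texts: int, false_positive_rate: float) -> float:
    """Expected number of human-written texts wrongly flagged as AI."""
    return num_human_texts * false_positive_rate


# Assumption: 500 human-written submissions (hypothetical batch size).
# 0.02 is the 2% false-positive rate mentioned above.
wrongly_flagged = expected_false_flags(500, 0.02)
print(f"About {wrongly_flagged:.0f} of 500 human texts could be flagged as AI")
```

Even a small rate adds up across many documents, which is why a detector score works better as one signal among several than as a final verdict.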


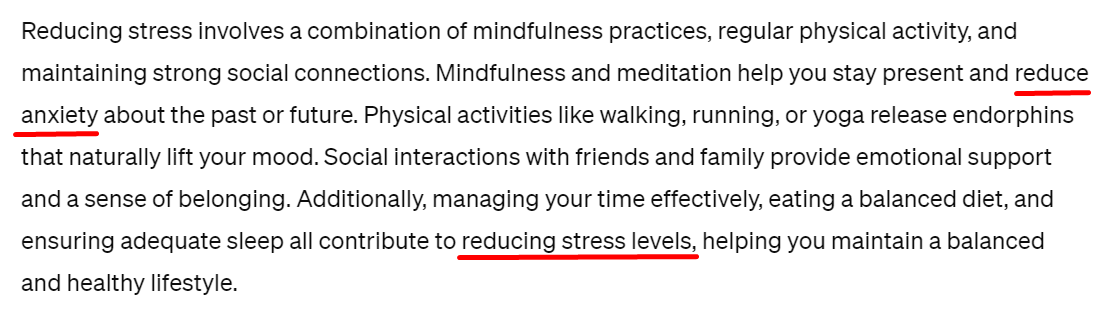

Repetitive words, phrases, or ideas are a signature characteristic of AI writing. That’s because AI models can’t recognize that they are using the same words repeatedly. Humans, meanwhile, tend to avoid this mistake by varying their word choice.

The clearest proof is when AI models write SEO text based on prompts. These models often repeat the keyword from the prompt in many sentences, leading to keyword stuffing, which is bad for SEO.

Repetitive words in AI

Above is a paragraph generated by ChatGPT-4 with the keyword “reduce stress.” In such a short answer, it uses the word “reduce” three times, making the whole work feel quite repetitive.
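
If you want to check for repetition without counting by hand, a minimal Python sketch like the one below can help. It is only an illustration: the stopword list, the threshold of three, the function name, and the sample text are all assumptions made for this example, and the sample is not the ChatGPT output shown in the image.

```python
import re
from collections import Counter

# Assumption: a tiny, hand-picked stopword list; a real check would use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "in", "is", "it",
             "can", "your", "you", "that", "for"}


def repeated_words(text, min_count=3):
    """Return non-stopwords that appear at least min_count times."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS)
    return {word: n for word, n in counts.items() if n >= min_count}


# Hypothetical sample text written for this example.
sample = ("Exercise can reduce stress. Deep breathing helps reduce stress too. "
          "To reduce stress further, sleep well.")
print(repeated_words(sample))
```

A word showing up three or more times in a few sentences is worth a second look, although short texts on a narrow topic will naturally repeat their key terms.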

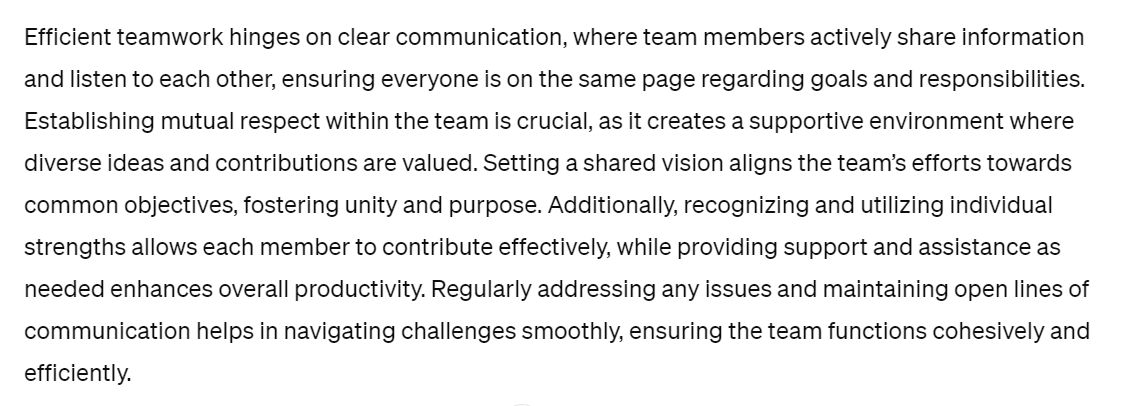

AI language models use basic grammar structures more often than humans do, which can make their sentences sound monotonous and stiff. One way to identify AI-generated text is to read a paragraph and check whether its phrases and sentences sound natural or strangely formal.

Formulaic sentences produced by AI

Here is how GPT-4 responds to my question about working efficiently: most sentences use basic grammar, with the subject being one or two verbs in the “-ing” form. Furthermore, ChatGPT-4 consistently adds another phrase after the complete sentence to extend the content, such as “ensuring…” and “fostering…”.
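
The trailing “-ing” pattern described above can be roughly approximated with a regular expression. The sketch below is a crude heuristic, not a real grammar check: the function name and sample text are hypothetical, and the pattern will also match innocent words such as “bring” or “morning” that follow a comma.

```python
import re

# Matches a comma followed by a word ending in "ing", running to the end of the sentence.
TRAILING_ING = re.compile(r",\s+\w+ing\b[^.!?]*[.!?]?$")


def trailing_ing_share(text):
    """Share of sentences that contain a comma followed by an '-ing' phrase."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return 0.0
    hits = sum(1 for s in sentences if TRAILING_ING.search(s))
    return hits / len(sentences)


# Hypothetical sample text written for this example.
sample = ("Planning your day saves time, ensuring steady progress. "
          "Taking breaks restores focus, fostering better results. "
          "I like tea.")
print(round(trailing_ing_share(sample), 2))
```

A high share does not prove anything by itself, but when most sentences in a passage end this way, the text matches the formulaic pattern described here.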

AI writing can also be detected through certain familiar words and phrases: crucial, delve, dive, tapestry, not only… but also…, etc. A Reddit user has even compiled a full list of frequently seen AI words.

If you read the two ChatGPT-4 examples above, you will also notice several words that keep appearing: ensure/ensuring, additionally, reduce, help, etc. Of course, not every text containing these words is automatically AI-written. But if they show up with abnormal frequency, the document deserves a closer look.
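
One simple way to judge “abnormal frequency” is to count how often watchlist words occur per 100 words. The following Python sketch assumes a small watchlist built from the single words mentioned in this section; the function name and sample sentence are hypothetical, and no particular cutoff is implied.

```python
import re

# Assumption: a short watchlist taken from the words named in this section.
WATCHLIST = {"crucial", "delve", "dive", "tapestry",
             "ensure", "ensuring", "additionally"}


def watchlist_rate(text, watchlist=WATCHLIST):
    """Watchlist hits per 100 words of text."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in watchlist)
    return 100.0 * hits / len(words)


# Hypothetical sample sentence written for this example.
sample = "It is crucial to delve into the rich tapestry of ideas."
print(f"{watchlist_rate(sample):.1f} watchlist words per 100 words")
```

Comparing this rate against texts you know were written by humans on the same topic gives a more meaningful baseline than any fixed number.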

Besides these familiar words, AI also loves adding complex and fancy words or phrases to its writing:

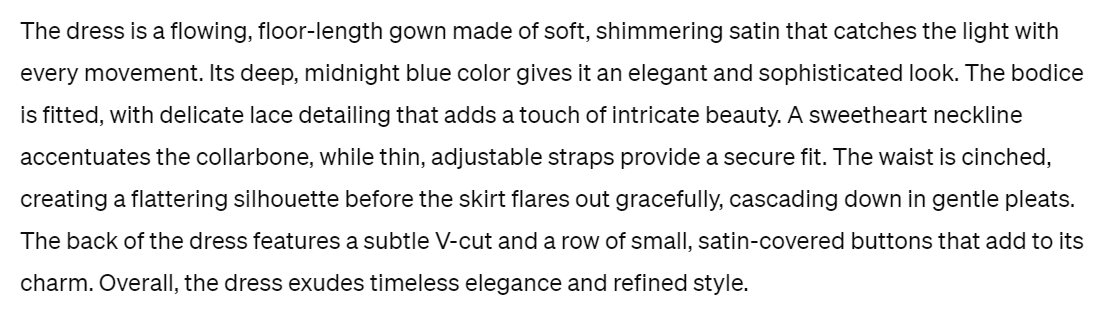

AI models typically use flowery words in the description

“Elegant,” “sophisticated,” “delicate,” “intricate,” “flattering,” and “refined”: these over-the-top yet meaningful words are a big indicator of AI in writing.

Due to its repetitive and formulaic structures, AI’s writing tone and style differ greatly from a human’s. Humans usually change their style and tone to match the content.

AI writing, however, lacks transition words, informal words, or slang, which makes it very dull. Instead of a unique human voice, it keeps a uniform, robotic tone from beginning to end.

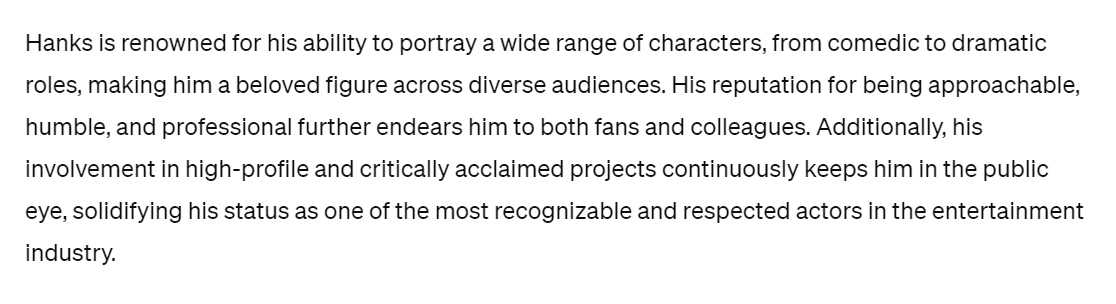

AI writing tends to have a monotonous, formal tone

When asked about a famous actor like Tom Hanks, ChatGPT-4 provides general information in a very formal tone, using phrases like “a beloved figure,” “his reputation for,” and “solidifying his status.” A journalist introducing Tom Hanks would rarely use such language.

AI models are better suited for processing academic or technical material since their algorithms are designed to compute and recite facts from their training data. Creating content that requires emotions, personal viewpoints, or experiences is challenging for them.

The findings generated by AI are often broad summaries, even when they refer to personal experiences, as they are essentially paraphrases of other authors’ work. In contrast, journalists’ and copywriters’ relatable perspectives and engaging stories make for much better content.

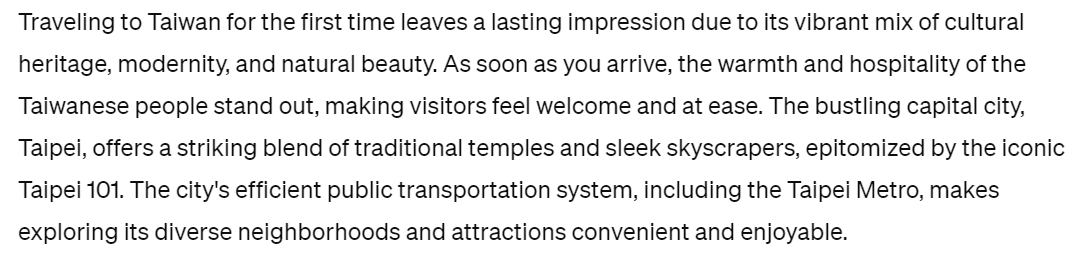

No personal emotion or experience in AI-written text

The article above was written by an AI model about traveling to Taiwan. The whole passage is full of ordinary information that could apply to any destination on Earth. It doesn’t feel like the writer has actually been to Taiwan, since it lacks the feeling of being there.

Since AI models lack personal experience, their explanations are often generic and vague, without specific information. They also use many filler words to make the text sound more complicated and professional. Still, the result lacks deeper meaning.

In contrast, human writers usually add relevant examples, advice, or arguments to their works, especially if they have personal experience with the topic.

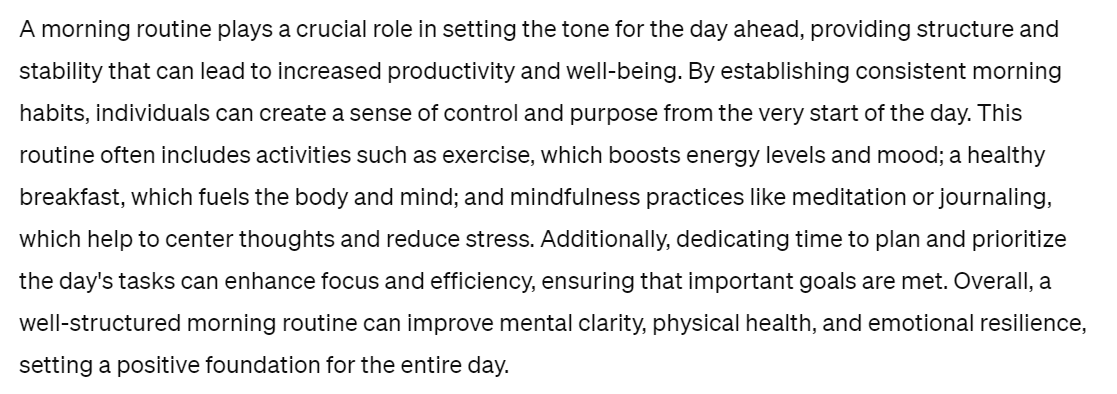

Below is an AI-generated answer to my question about the role of a morning routine:

The AI-version morning routine is so generic

At first sight, the answer may look sufficient. But when you look closer, you’ll realize it doesn’t provide any significant, detailed information. Which exercises should you do to boost energy? What kind of breakfast should you cook? How should you practice meditation and journaling?

If you ask a health professional the same question, they’ll come up with a more satisfactory answer with different plans, so you can choose the one that suits you best.

The results produced by AI models are only strings of words based on their training data. As for whether they really understand what they write, the answer is no.

Therefore, on complicated topics, AI answers are often shallow and only touch the surface of the matter. So, if you want to know how to detect AI in writing, check whether the text contains any specialized knowledge or just facts you could find on any website.

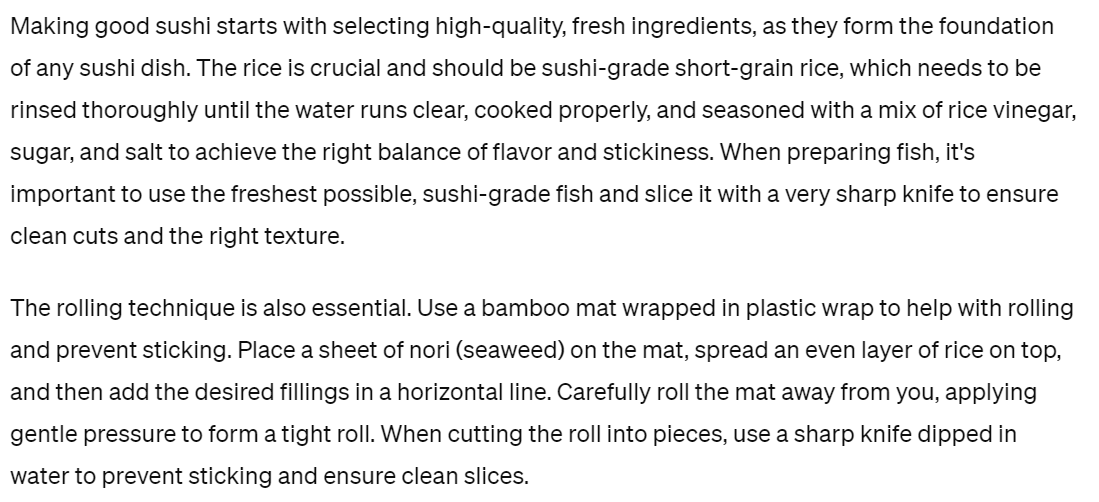

The answer below supports my point:

AI writing can’t match subject-matter expertise

Making sushi is a sophisticated process that takes years to learn. Here, while the AI writing gets the general information right, it completely misses the details. You won’t learn the proper ratio of rice vinegar, salt, and sugar for the rice, or how to choose sushi-grade fish and fillet it.

Since AI answers rarely come with information sources, you need to fact-check all of them against reliable sources. There are many cases where AI models provide incorrect information, especially product names, attributes, or descriptions. This problem becomes more severe in fields like healthcare or finance, where even a tiny error can have irreversible consequences.

In 2023, ChatGPT made a huge mistake by supplying an attorney with nonexistent cases, which were then submitted to court. The judge pointed out that six AI-generated cases had wrong names and docket numbers, not to mention fake citations and quotes. The attorney who used AI had to pay a fine of $5,000 for this mistake.

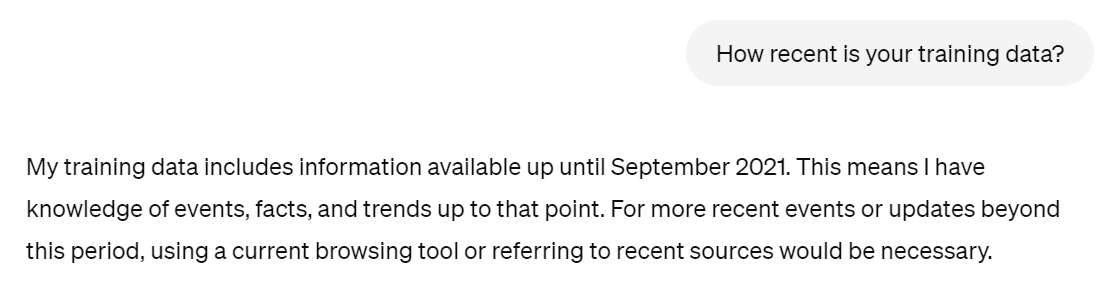

If researchers don’t regularly update the training data, AI models may not have access to the latest information. That makes their content irrelevant or inapplicable to the present.

AI’s training data easily gets outdated

Here is one example: ChatGPT-4 admits its training data ends in September 2021, almost three years ago. Since then, the world has undergone remarkable changes, including the later course of the COVID-19 pandemic. So, there’s a high chance its answer is outdated.

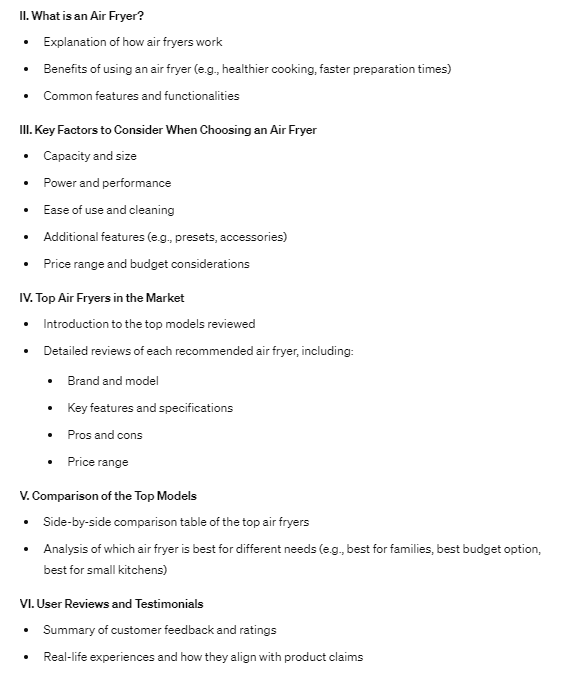

Most online content is created to satisfy users’ demand for specific information. However, AI models hardly take users’ search intent into account. So, when you want to detect AI in writing, check whether the content aligns with the search intent behind the topic. If it doesn’t, the author is most likely AI.

The AI outline completely misses the search intent

The outline above is for the keyword “best air fryer.” Although some sections contain helpful content, the AI outline doesn’t list any recommended products. If you write according to this outline, your readers will soon look for another website that meets their search intent better.

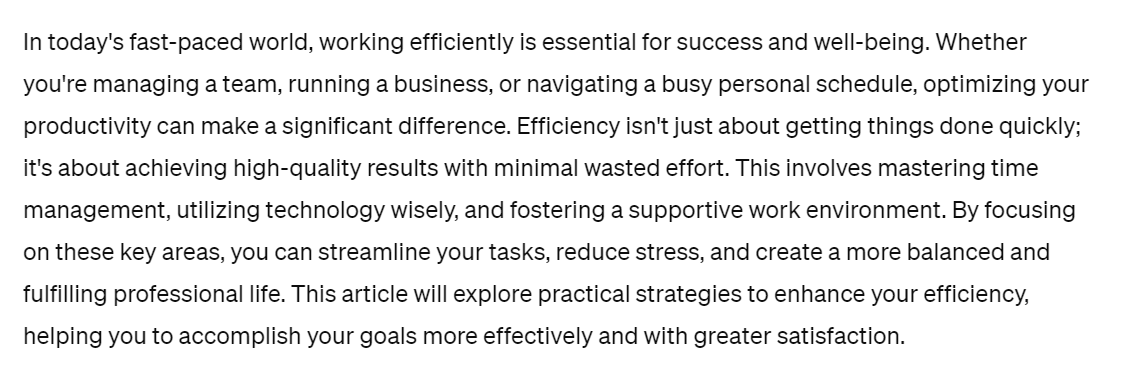

When humans write, they usually make their content progress naturally so readers can follow it more easily. AI models don’t understand that, so they struggle to create a coherent piece of content.

AI-generated text often has information jumping from one place to another, making the flow messy and hard to follow.

AI’s storytelling is often unconvincing

As you can see from this AI-written example, the first three sentences share the same idea about the benefit of working efficiently. However, by the end of the passage, the content has changed to practical strategies to enhance efficiency. This abrupt change can confuse readers and make them wonder about the real intent of the work.

As mentioned above, AI writing struggles with personal emotion. Sarcasm is a complex use of language, and understanding its intentional humor requires high emotional intelligence. So, it’s extremely difficult for AI models to understand or generate sarcastic writing.

Even if you try, the result often consists of jumbled and weird sentences that completely miss the mark. And when someone tries sarcasm with AI language models, these models may mistake it for sincere sentences.

AI models struggle with sarcasm

Here is what you get when you ask ChatGPT-4 for sarcasm. I must say, “Nice try” for a machine.

Yes, most AI detection tools can now detect AI-generated content. However, no tool is completely accurate, because of false positives. If your own writing shows the same signs described above, these AI detectors can falsely flag your work as AI-generated.

Below are statements from some well-known AI detectors about their detection ability:

Moreover, as AI models keep evolving, AI detection tools have more difficulty distinguishing between human writing and AI-generated text.

The battle between AI writing and AI detection may continue for a long time. And it’s never too late to equip yourself with several methods for detecting AI in writing, whether through AI detectors or other signs like repetitive words or formulaic sentences.

Subscribing to TechDictionary for more informative articles about AI is another great way to stay up to date! Comment below if you have any questions about AI writing and AI detection.