To answer “how do AI detectors work?”, you need to look at both the methods they use and the criteria they judge by. Sounds difficult? Don’t worry. We’ll explain it in a simple way so you can easily follow along.

Besides how AI content detectors work, you’ll also discover their reliability rate, roles, and future challenges. Let’s dive right in!

Most AI content detectors work by analyzing the submitted text for particular patterns. Still, different tools will have their own analysis methods, as below:

How AI detectors identify AI content

Besides analyzing the text, AI writing detectors work by relying on these 4 criteria to pinpoint automatically generated works:

1. Burstiness

According to the University of Nicosia, burstiness is the variation in sentence length, tempo, and structure. In human-written text, sentence lengths and grammar vary naturally, resulting in high burstiness scores.

In contrast, AI-generated sentences often have a similar, average length (~10 to 20 words) and typical structures. That’s why they can feel monotonous and score lower on burstiness.
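To make burstiness concrete, here is a minimal sketch in Python. It assumes burstiness can be approximated by the coefficient of variation of sentence length (standard deviation divided by mean). That formula, the function names, and the sample texts are our own illustration, not the scoring used by any particular detector.

```python
import re
import statistics

def sentence_lengths(text):
    """Split text on ., ! or ? and return the word count of each sentence."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Assumed proxy: std dev of sentence length divided by the mean length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)

# Hypothetical sample texts, written for this illustration only.
uniform = "The cat sat on the mat. The dog lay on the rug. The bird sat on the wire."
varied = "Stop. The cat, startled by the sudden noise outside, leapt off the mat and ran. Why?"

print(burstiness(uniform))  # 0.0, every sentence has six words
print(burstiness(varied))   # noticeably higher, lengths are 1, 14 and 1
```

Real detectors likely combine sentence length with other signals such as structure and rhythm, so treat this as a rough intuition rather than a working detector.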

2. Perplexity

Perplexity measures how predictable the word choice is, or in other words how “surprised” a language model is by the text. AI text often has a lower perplexity score than human writing because language models tend to pick likely, easy-to-read wording. That’s why AI writing is smoother but also more predictable.

Meanwhile, human writing tends to be more creative, with more varied word choices. This raises perplexity, and it can also lead to more mistakes.
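For readers who like numbers, perplexity is commonly computed as the exponential of the average negative log-probability that a language model assigns to each token. The sketch below assumes you already have those per-token probabilities. The two probability lists are made-up values for illustration, not output from a real model.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical probabilities a language model might assign to each word.
predictable = [0.5, 0.5, 0.5, 0.5]  # the model expected these words
surprising = [0.1, 0.1, 0.1, 0.1]   # the model found these words unlikely

print(perplexity(predictable))  # about 2
print(perplexity(surprising))   # about 10
```

The more a text surprises the model, the higher the score, which is why unusual human phrasing tends to push perplexity up.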

3. Embedding

Embedding can be tricky to understand at first, so imagine it as a fingerprint representing the text. AI content detectors use embeddings to represent words and phrases as vectors in a high-dimensional space.

This way, words become quantifiable data points based on their meaning. Words with similar meanings sit close together, so detectors can look for patterns that are unusual in human writing.
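The idea of “close together” can be sketched with cosine similarity, a common way to compare two vectors. The three-dimensional vectors below are toy values we invented for illustration. Real embeddings come from a trained model and usually have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, not produced by any real model.
toy_embeddings = {
    "happy": [0.9, 0.1, 0.0],
    "joyful": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}

similar = cosine_similarity(toy_embeddings["happy"], toy_embeddings["joyful"])
unrelated = cosine_similarity(toy_embeddings["happy"], toy_embeddings["invoice"])
print(similar)    # close to 1, the words point in a similar direction
print(unrelated)  # close to 0, the words have little in common
```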

4. Classifiers

Classifiers are ML models that analyze language patterns in the submitted text and sort them into predetermined categories. These models are trained on vast datasets of human-written and machine-written content, and they use that data to distinguish AI writing styles.

Sometimes, detectors also learn from unlabeled data. In these cases, the approach is unsupervised. However, unsupervised models are generally less accurate than supervised ones.
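Here is a very small sketch of a supervised classifier. It uses a nearest-centroid rule over two features (a burstiness score and a perplexity score), which is our own simplification. Commercial detectors do not publish their models, and the training values below are invented for illustration.

```python
import math

def centroid(points):
    """Average each feature across a list of equal-length feature tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

def train(labeled):
    """labeled: list of (features, label) pairs. Returns {label: centroid}."""
    by_label = {}
    for features, label in labeled:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(pts) for label, pts in by_label.items()}

def predict(model, features):
    """Pick the label whose centroid is nearest to the given features."""
    return min(model, key=lambda label: math.dist(model[label], features))

# Hypothetical (burstiness, perplexity) pairs with known labels.
training_data = [
    ((0.9, 60.0), "human"),
    ((1.1, 75.0), "human"),
    ((0.2, 15.0), "ai"),
    ((0.3, 20.0), "ai"),
]
model = train(training_data)

print(predict(model, (0.25, 18.0)))  # ai
print(predict(model, (1.0, 70.0)))   # human
```

Note that the features are not rescaled here, so perplexity dominates the distance. A real system would normalize its features and train on far more examples.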

In addition to the four criteria mentioned earlier, AI detectors also look for the repetitive vocabulary and structure that AI-generated writing tends to have. One likely reason is that people often use specific keywords in their prompts, so the same words and phrases recur throughout the output.

Are AI detectors accurate?

Many AI-detecting tools, such as Turnitin and Originality AI, advertise accuracy rates of over 90%. However, no tool achieves 100% accuracy.

Some still fail if the AI content is edited or paraphrased after being generated. Others mistake human-written text for AI-generated if the former happens to have low burstiness or perplexity scores.

“The overall accuracy of tools in detecting AI-generated text was only around 28%, with the best tool achieving just 50% accuracy.” – Van Oijen (2023)

Although AI detectors are useful in detecting AI-written texts, it is recommended to use them as references, not conclusive evidence.

AI-detecting models automatically identify harmful or offensive content, like graphic images, hate speech, or violence, and safeguard users from them. This way, online platforms also gain loyalty and trust from users.

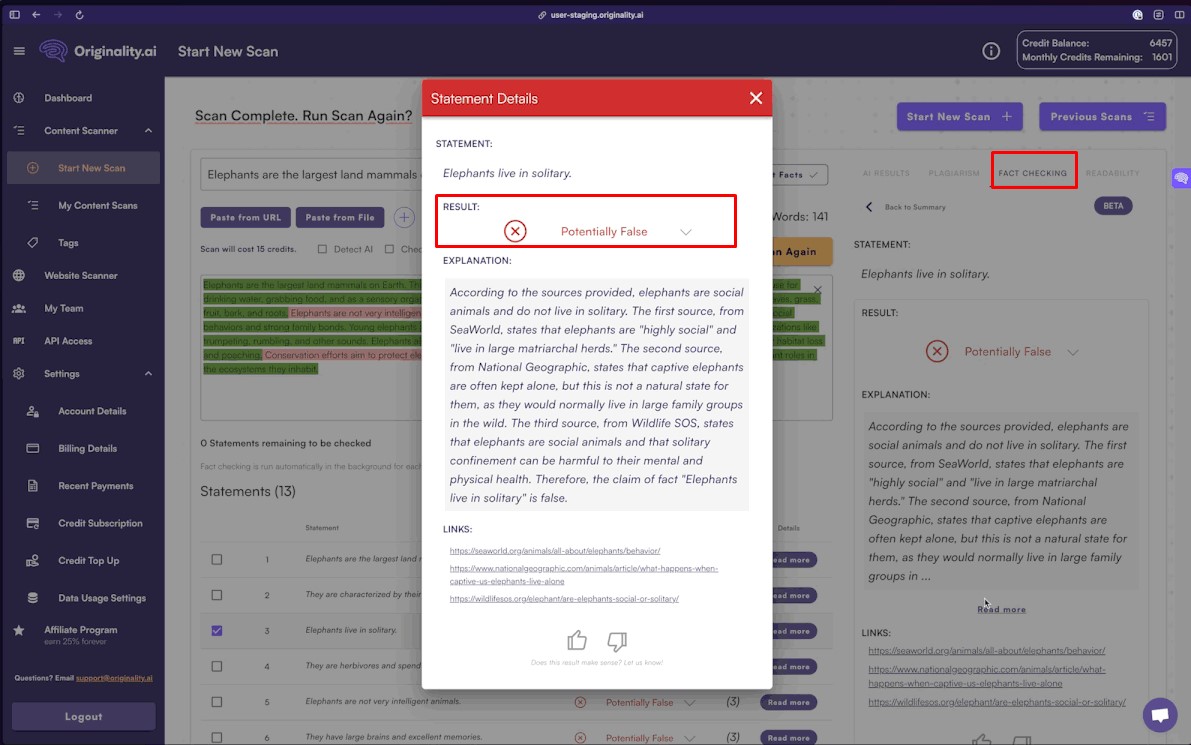

For instance, Originality AI not only checks AI-generated text but also includes a fact-check feature to verify the accuracy of the information.

Originality AI: A Fact Checker Result

AI Detectors Help to Defend Copyright

AI-written content can easily infringe copyright or count as plagiarism if it is not properly attributed. By flagging such work, AI content detectors help protect intellectual property and originality.

That also applies to universities, where students may try to pass off automatically generated work as their own. Professors can use AI-detecting models to maintain an honest environment.

Before AI detectors, most moderation processes were manual, which took lots of effort and time and couldn’t keep up with the amount of content produced online. Since AI-detecting tools do all this automatically, the process is quicker and more efficient, with less cost and response time.

Online content is also subject to government laws and regulations that protect users and prevent harmful or illegal content from spreading.

According to the US Copyright Office, content generated entirely by AI, without human authorship, is not eligible for copyright protection. Additionally, AI mistakes have occurred in the past, such as amplifying racial and gender stereotypes or even causing car crashes. That’s why it’s important to have AI detectors in place to help ensure that content adheres to the relevant laws and regulations.


There are many challenges to AI detectors. Here are the 6 most common obstacles and how to overcome them:

False positives are a common mistake in which AI-detecting tools mistake human writing for AI-generated content and mark it as inappropriate. This mistake can cause these tools to unnecessarily remove or block content, affecting user experience or ruining trust.

Several techniques can reduce false positives, such as adjusting detection thresholds, fixing imbalanced datasets, and ensemble learning. Additionally, user feedback and human-in-the-loop validation (where people review whether a machine learning model’s predictions are right or wrong) make AI detection more accurate and reliable.
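To see why adjusting the detection threshold matters, consider this small sketch. It assumes a detector outputs an “AI likelihood” score between 0 and 1 and flags anything at or above a threshold. The scores and labels are made up for illustration, with 1 meaning AI-written and 0 meaning human-written.

```python
def false_positive_rate(scores, labels, threshold):
    """Share of human-written texts (label 0) flagged as AI at this threshold."""
    human_scores = [s for s, y in zip(scores, labels) if y == 0]
    if not human_scores:
        return 0.0
    return sum(1 for s in human_scores if s >= threshold) / len(human_scores)

def recall(scores, labels, threshold):
    """Share of AI-written texts (label 1) correctly flagged at this threshold."""
    ai_scores = [s for s, y in zip(scores, labels) if y == 1]
    if not ai_scores:
        return 0.0
    return sum(1 for s in ai_scores if s >= threshold) / len(ai_scores)

# Hypothetical detector scores and ground-truth labels.
scores = [0.10, 0.35, 0.55, 0.62, 0.70, 0.80, 0.91, 0.97]
labels = [0, 0, 0, 0, 1, 0, 1, 1]

for threshold in (0.5, 0.9):
    fpr = false_positive_rate(scores, labels, threshold)
    rec = recall(scores, labels, threshold)
    print(f"threshold={threshold}: false positives={fpr:.2f}, recall={rec:.2f}")
```

Raising the threshold in this toy data removes the false positives but also lets one AI-written text slip through, which is the trade-off developers have to balance.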

AI detectors can be data-biased due to improper training

Many AI-detecting models are trained according to available datasets. If the data’s content lacks diverse perspectives in the real world, it can cause these models to be biased toward certain formulaic writing, resulting in false or unfair predictions for underrepresented groups.

To reduce data bias, developers need to collect more diverse data and develop anti-bias algorithms for training AI detection.

When attackers deliberately alter content to bypass AI detectors, this is called an adversarial attack. Developers can harden AI detectors against these attacks through adversarial training, model ensembling, or input sanitization.
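As one example of input sanitization, the sketch below cleans text before it reaches a detector. It assumes the attacker hides invisible zero-width characters or uses look-alike fullwidth letters. This is only one possible kind of trick, and the `sanitize` function and its character list are our own illustration rather than any tool’s actual pipeline.

```python
import unicodedata

# Invisible characters sometimes inserted to disrupt text analysis (assumed list).
ZERO_WIDTH = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}

def sanitize(text):
    """Normalize Unicode, drop zero-width characters, and collapse whitespace."""
    normalized = unicodedata.normalize("NFKC", text)  # e.g. fullwidth letters to ASCII
    visible = "".join(ch for ch in normalized if ch not in ZERO_WIDTH)
    return " ".join(visible.split())

tampered = "de\u200btect\u200d   this"
print(sanitize(tampered))  # detect this
```

This does not handle every trick, such as swapping Latin letters for similar-looking letters from other alphabets, so it would be one layer among several.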

Although widely used today, only a few understand how AI content detectors work. This lack of explainability and interpretability makes it hard to trust these models, especially in high-stakes work regarding legal compliance.

To help users understand AI detectors better, developers are working on different techniques, such as attribution methods (scoring how much each part of the input contributed to a prediction), model-agnostic explanations (explaining a prediction without relying on the model’s internals), and post-hoc interpretability techniques (analyzing a trained model’s behavior after the fact).

As the underlying distribution of data changes over time, AI detectors can easily become outdated or even incorrect. Therefore, developers must update or retrain them regularly with up-to-date data. Monitoring and user feedback also help.

Many users have voiced doubts about privacy and security when using AI detectors, particularly regarding their personal information. In response, detectors can include data-protection mechanisms such as differential privacy, federated learning, and secure multiparty computation.

Plagiarism checkers and AI detectors share a similar purpose: to protect academic honesty and originality. Still, the two differ considerably in what they look for and how they work, as described in the table below:

| | AI Detectors | Plagiarism Checkers |
| --- | --- | --- |
| Goal | Find automatically generated content | Find content copied from other sources |
| Mechanism | Examine the submitted work for specific characteristics: perplexity, burstiness, embedding, and classifiers | Compare the submitted work with its database for similarities between keywords, phrases, or content |
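The “compare for similarities” mechanism of a plagiarism checker can be sketched as an overlap between word sequences. The example below measures Jaccard similarity over three-word sequences (n-grams). This is a simplified stand-in we chose for illustration. Real checkers compare against large databases and use more robust matching.

```python
def word_ngrams(text, n=3):
    """Return the set of n-word sequences in the text, lowercased."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def overlap_score(submission, source, n=3):
    """Jaccard similarity between the two texts' word n-gram sets (0.0 to 1.0)."""
    a, b = word_ngrams(submission, n), word_ngrams(source, n)
    if not a or not b:
        return 0.0  # one text is too short to compare
    return len(a & b) / len(a | b)

# Hypothetical texts for illustration.
source = "the quick brown fox jumps over the lazy dog"
copied = "The quick brown fox jumps over the lazy dog"
original = "a completely unrelated sentence about detectors"

print(overlap_score(copied, source))    # 1.0, identical apart from capitalization
print(overlap_score(original, source))  # 0.0, no shared three-word sequences
```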

Still, plagiarism checkers can also catch AI content. Some AI writing tools only lightly paraphrase sentences from the original source by substituting words or changing sentence structures from passive to active voice. Though this can bypass some free AI detectors, it can lead to another serious problem: plagiarism.

At TechDictionary, we always suggest that if you use any AI writing tool, you also check the output for plagiarism. Some AI detectors, like Originality AI, offer both features: plagiarism checking and AI-text detection.

Originality AI reports a low false positive rate of about 2.5%, which reduces (but does not eliminate) the risk that your writing will be falsely flagged.

Overall, the answer to “How do AI detectors work?” is that they analyze the submitted text according to different criteria: perplexity, burstiness, embedding, and classifiers. While they are already useful for various purposes, AI-detecting tools will keep developing as they work to overcome numerous challenges.

Subscribe to TechDictionary for more helpful articles. Comment below if you have questions regarding AI detectors!