Ever been puzzled by an AI response that looks logical but turns out to be nonsense? You’re not alone. These cases are called “hallucinations”: instances where an AI model generates factually incorrect or irrelevant output.

Grounding techniques are widely seen as the go-to remedy for this problem. So, what are grounding and hallucinations in AI? This article covers the basics of both, along with several tips for reducing fabricated information in AI output.

Grounding in AI means linking an AI system’s understanding to real-world data, which helps it produce more accurate and relevant outputs. It works like a fact-checker that reduces the misinformation AI tools generate.

What is Grounding in AI?

Grounding is essential for enterprises and other organizations working on niche subjects that were not covered in the initial training of large language models (LLMs).

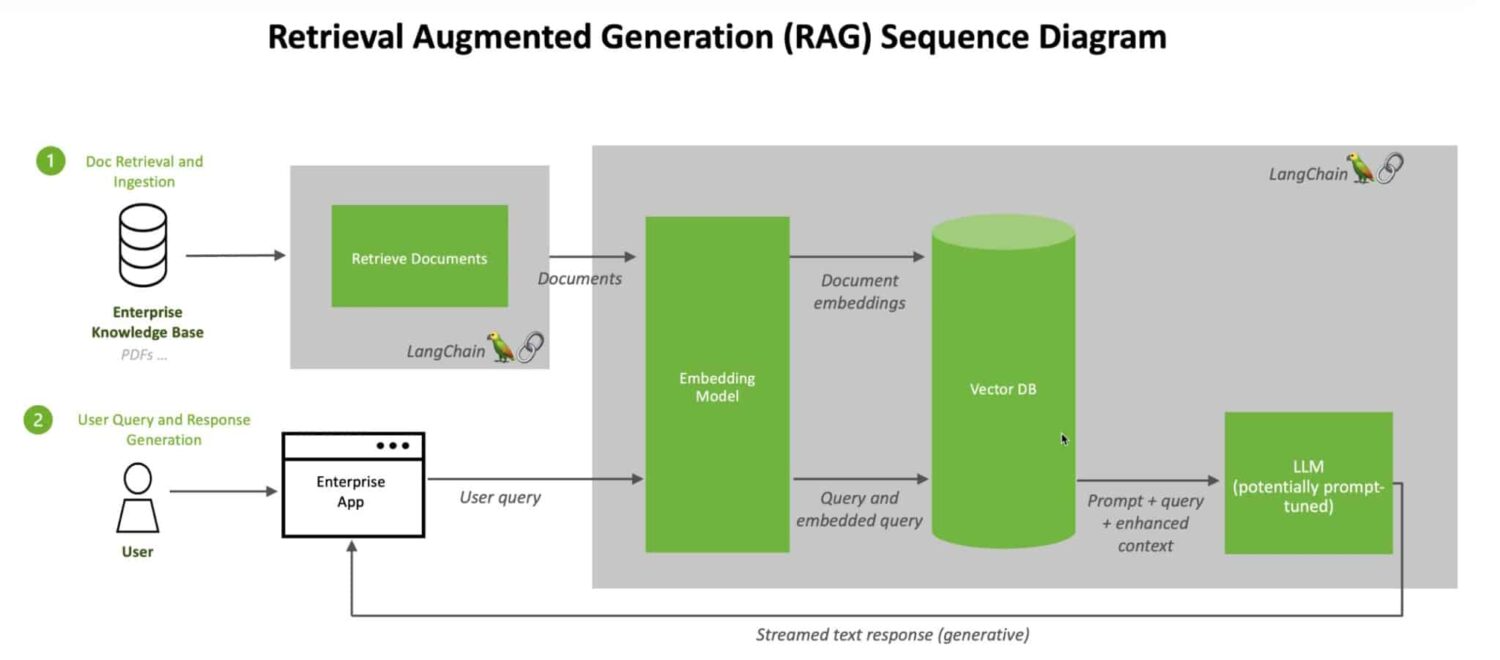

There are multiple AI grounding techniques; the two most common are fine-tuning and retrieval-augmented generation (RAG).

# Fine-tuning approach

This technique further trains the model on verified knowledge from established sources, such as scientific publications or private enterprise data. During that process, AI-generated responses are compared against the verified answers, and mismatches are flagged so the model can be adjusted toward the verified facts. This method only works for specific tasks, since the AI will still struggle with topics outside the data it was trained on.
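The comparison step described above can be sketched in a few lines. This is a minimal illustration, not a fine-tuning pipeline: it only shows how model answers might be checked against verified answers and flagged. The field names (`model_answer`, `verified_answer`) and the sample data are assumptions made up for this example, and a normalized exact match stands in for whatever comparison method a real system would use.

```python
import re

def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^a-z0-9\s]", "", text.lower())
    return " ".join(text.split())

def flag_mismatches(examples):
    """Return the examples whose model answer differs from the verified answer."""
    return [
        ex for ex in examples
        if normalize(ex["model_answer"]) != normalize(ex["verified_answer"])
    ]

# Hypothetical sample data: one correct answer, one fabricated answer.
examples = [
    {"question": "How long is the warranty?",
     "model_answer": "Two years.",
     "verified_answer": "two years"},
    {"question": "How long is the warranty?",
     "model_answer": "Ten years.",
     "verified_answer": "two years"},
]

flagged = flag_mismatches(examples)
for ex in flagged:
    print("Mismatch:", ex["model_answer"], "vs verified:", ex["verified_answer"])
```

In practice, the flagged examples would be reviewed and fed back as corrected training data. Exact matching is deliberately strict, so softer comparisons or human review are likely needed for free-form answers.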

# Retrieval-augmented generation (RAG)

Retrieval-augmented generation (RAG) is a more versatile grounding technique for improving the factual accuracy of AI-generated text, because it brings real-world data from external sources directly into the response generation stage.

Retrieval-augmented generation combines LLMs – Source: NVIDIA

As a result, the model can check its answer against that information and deliver more accurate and relevant outputs in many different contexts. The technique is also cost-effective, since organizations don’t need to retrain their AI models to ground them.

In short, a RAG grounding tool retrieves relevant real-world data and passes it to the LLM, so that the model produces responses with better accuracy and relevance.

Note: Before the LLM can use the selected data, that data must be converted into a machine-readable form, for example by organizing it in a knowledge graph or by “embedding” it into numerical vectors.
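To make the retrieval idea concrete, here is a minimal sketch in Python. It rests on several assumptions: the `embed` function is a toy bag-of-words counter standing in for a real embedding model, the documents are invented, and the final prompt would be sent to whichever LLM you use (that call is left out). It shows the general RAG pattern, not any specific vendor’s implementation.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Combine the retrieved context and the question into one grounded prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the context is not enough, say you don't know.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

# Hypothetical knowledge base entries.
documents = [
    "Premium accounts include free international transfers.",
    "Lost or stolen cards can be blocked in the mobile app.",
    "Branches are open Monday to Friday.",
]

prompt = build_prompt("How do I block a stolen card?", documents, k=1)
print(prompt)
```

Because the model only sees the retrieved passage and is told to stay within it, the knowledge base can be updated at any time without retraining the model.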

Imagine a situation where you ask your AI text generator a question, and it delivers an answer that appears logical yet turns out to be nonsensical. Situations like this are AI hallucinations – when AI tools produce incorrect or misleading outputs.

What is hallucination in AI?

AI hallucinations typically stem from the following factors:

For instance, if you ask your AI assistant for a recipe it doesn’t know, such as Bun Rieu Cua, it is likely to piece together related information and fabricate an answer.

For example, if an LLM was trained on birthday party emails, it may add “happy birthday” even when the customer asked for an email congratulating someone on a promotion.

Grounding is like a safety net for an AI system. It ensures that the system responds to users in a relevant, reliable, and accurate manner. Hallucinations occur when there are gaps or deficiencies in an AI system due to improper grounding.

By improving grounding, we can reduce hallucinations in AI models, leading to more trustworthy and accurate AI systems.

To make grounding and hallucinations in AI more concrete, this section looks at two real-world applications.

One of the most significant applications of grounding is addressing hallucinations in AI chatbots and building customized solutions.

For example, off-the-shelf bank chatbot solutions can typically handle general questions about banking products and services. However, they might struggle to accurately address specific inquiries like checking account balances or reporting stolen cards.

Therefore, when a bank wants to build its own customized chatbot, it should ground the underlying model. Grounding gives the chatbot access to relevant product details, customer data, and real-time information. With these capabilities, the AI assistant can provide personalized and precise support, ultimately enhancing customer satisfaction.
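As a rough sketch of what this could look like for the bank example, the snippet below looks up a customer’s record and places the verified facts in the prompt before the question. Everything here is hypothetical: the `Account` fields, the in-memory `ACCOUNTS` store, and the customer ID are placeholders for the bank’s real systems, and the call to the LLM itself is left out.

```python
from dataclasses import dataclass

@dataclass
class Account:
    customer_id: str
    balance: float
    card_status: str

# Hypothetical in-memory store standing in for the bank's real systems.
ACCOUNTS = {"c-001": Account("c-001", 1520.75, "active")}

def grounded_context(customer_id: str) -> str:
    """Fetch verified facts for one customer, or say that none were found."""
    account = ACCOUNTS.get(customer_id)
    if account is None:
        return "No account record found for this customer."
    return (
        f"Customer {account.customer_id}: balance {account.balance:.2f}, "
        f"card status {account.card_status}."
    )

def build_chat_prompt(customer_id: str, question: str) -> str:
    """Put the verified facts ahead of the customer's question."""
    return (
        "Use only the verified account facts below when answering.\n"
        f"Facts: {grounded_context(customer_id)}\n"
        f"Customer question: {question}"
    )

print(build_chat_prompt("c-001", "What is my balance?"))
```

When no record exists, the prompt says so explicitly, which gives the model a reason to decline rather than invent a balance.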

Grounding and Hallucinations in AI

Hallucinations can also occur in medical diagnoses. An AI system lacking an effective grounding process may misidentify healthy patients as having severe illnesses based on a few similar patterns. This misidentification could result in harmful and costly treatments for patients.

Grounding the AI system in the patient’s medical history and in established medical knowledge gives it a more comprehensive view. This helps minimize misidentifications and supports more accurate diagnoses.

Here are some practical tips our TechDictionary team uses to prevent AI hallucinations.

We hope this article has helped you understand what grounding and hallucinations in AI are.

Preventing hallucinations in AI is an ongoing process, owing to the complexity and constant change of real-world data. At TechDictionary, we therefore recommend combining several grounding strategies so that AI assistants produce relevant and reliable outputs.