Artificial intelligence is a fascinating area of technology that raises many questions, including “Are AI detectors accurate, and how reliable are they?” This topic interests a variety of groups, including technology enthusiasts, privacy advocates, and regulatory bodies, all of whom are concerned with the reliability and ethical implications of AI detection tools. As AI technology advances, these detectors need to be accurate so that they can reliably identify AI-generated content.

In this article, we will explore the capabilities, challenges, and future prospects of AI detectors, providing readers with a comprehensive overview to help them understand just how dependable these technological tools are.

The Use of AI in Writing

AI Writing Tools

AI writing tools are software programs that use artificial intelligence to help generate text. These tools can be used to write different kinds of material, like blog posts, news articles, marketing copy, and even fiction. The main benefits of AI writing assistants are that they make writing easier by giving you tips on improving your grammar, style, and coherence or by writing whole pieces based on keywords you enter.

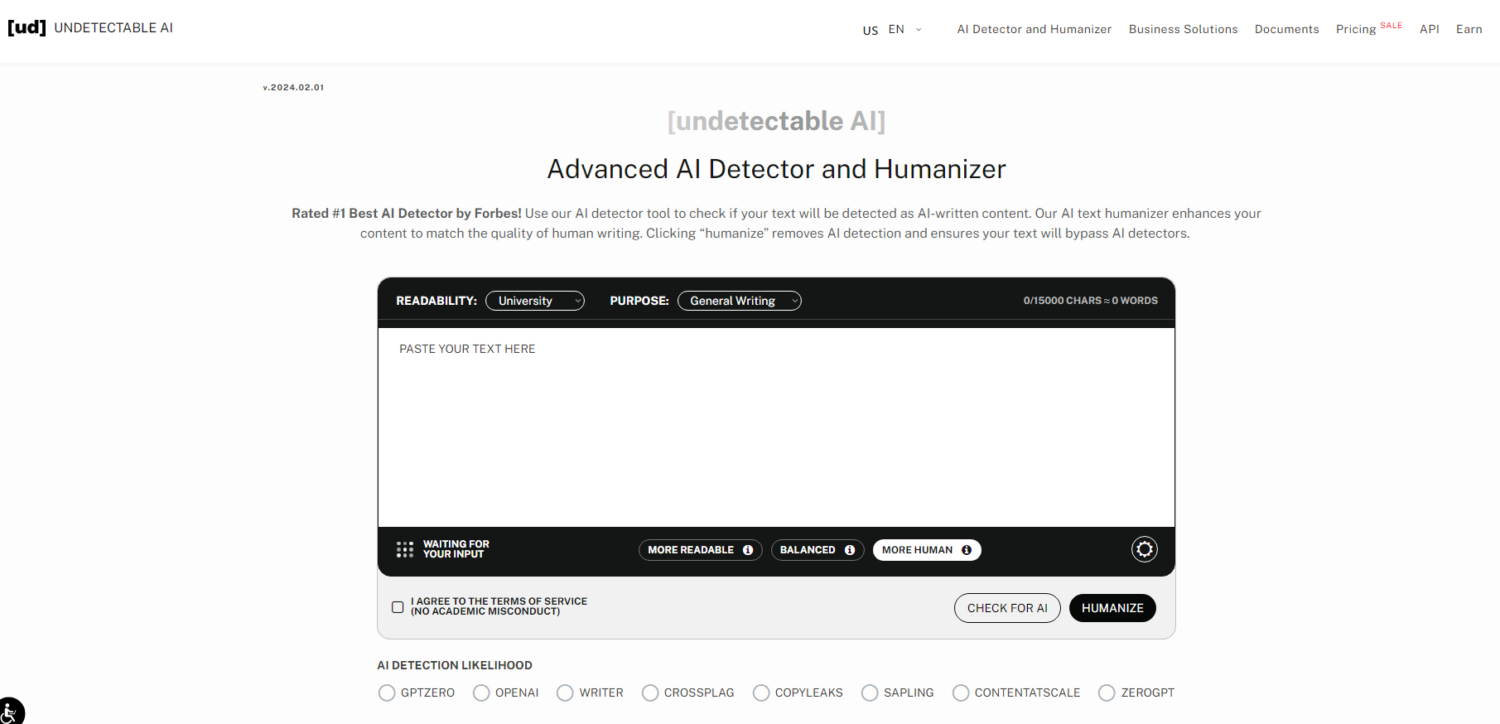

AI tools can produce a lot of content quickly, but they don’t always ensure quality, relevance, or nuance. Some popular AI writing tools include Undetectable AI, QuillBot, Jasper, and Stealth Writer.

AI Detectors

It is now possible to use artificial intelligence (AI) to detect whether a text was generated by another AI. These AI detection tools not only identify content written by AI but also check for plagiarism. Due to the rapid development of AI, these tools are becoming increasingly important in academic and business contexts to ensure the originality of written works.

Some popular AI detectors include Turnitin, Copyleaks, Originality AI, and Content at Scale. Since AI-generated text is becoming more common, the question arises: How do AI detectors work, and are they accurate?

Let’s dive right in:

How Do AI Detectors Work?

To determine how reliable AI detectors are, we first need to understand how they work. The best AI detectors analyze a text and compare it with a vast database that includes both human-written and AI-generated content.

These AI detectors use several methods and criteria to identify AI writing, such as:

- Statistical Analysis (Perplexity and burstiness)

- Semantic Analysis

- Stylometric Analysis

- Behavioral Analysis

These methods estimate how likely it is that a text was written by AI rather than prove who wrote it. This explains why no AI detector can guarantee anywhere close to 100% accuracy: false positives and false negatives are always possible.
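
To make the statistical signals in the list above more concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how any particular detector works: burstiness is approximated as the variation in sentence length, and perplexity is computed with a simple word-frequency (unigram) model, whereas commercial detectors are generally understood to use much larger language models. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def split_sentences(text):
    """Very rough sentence splitter: break after '.', '!' or '?'."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def burstiness(text):
    """Coefficient of variation of sentence lengths, counted in words.

    Assumption: sentence-length variation stands in for burstiness.
    Higher values mean the sentence lengths vary more.
    """
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def tokenize(text):
    """Lowercase the text and keep runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference):
    """Perplexity of `text` under a unigram model built from `reference`.

    Assumption: a toy word-frequency model with add-one smoothing,
    so unseen words still get a nonzero probability.
    """
    ref_counts = Counter(tokenize(reference))
    vocab_size = len(ref_counts) + 1  # one extra slot for unseen words
    total = sum(ref_counts.values())
    words = tokenize(text)
    if not words:
        return float("inf")
    log_prob = sum(
        math.log((ref_counts[w] + 1) / (total + vocab_size)) for w in words
    )
    return math.exp(-log_prob / len(words))
```

In this sketch, predictable wording yields lower perplexity and uniform sentences yield lower burstiness. Detectors generally treat such regularity as a hint of AI writing, which is also why plainly written human text can end up flagged.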

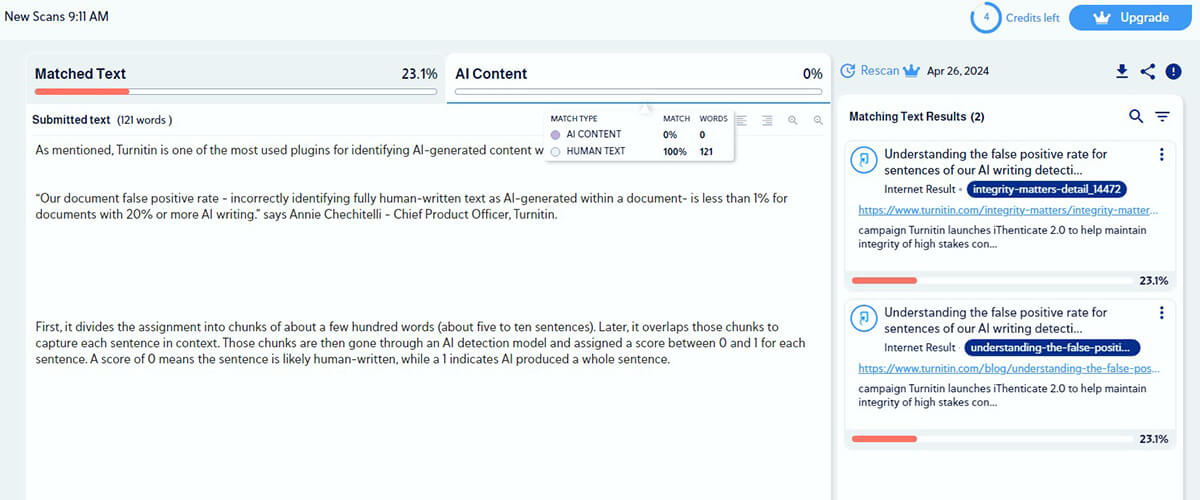

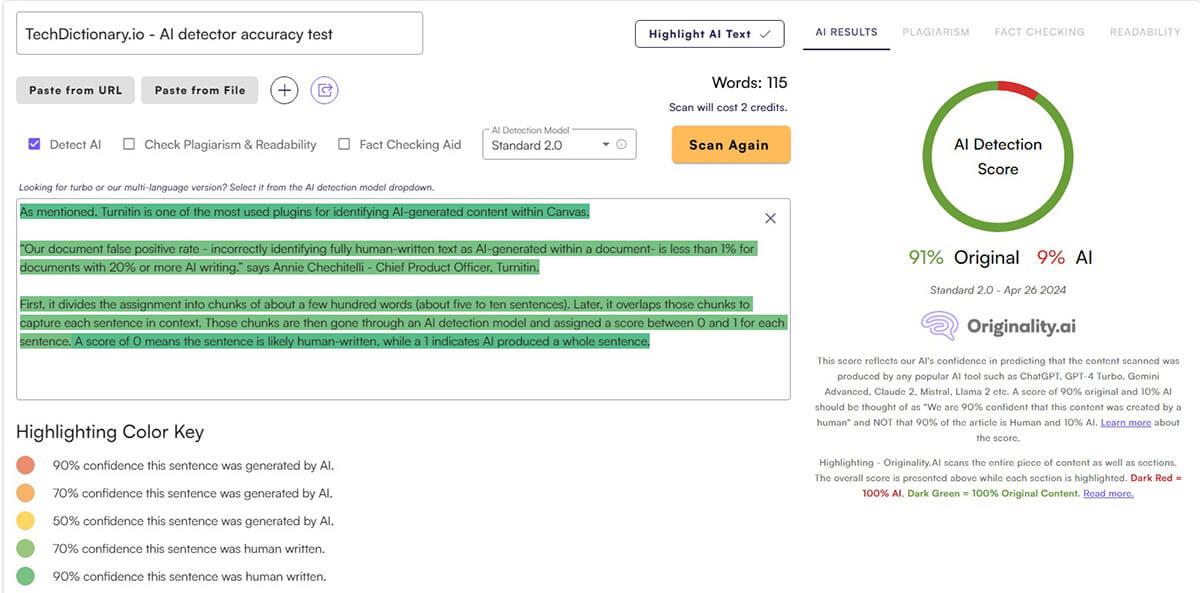

Different AI detectors generate varying types of reports. While some highlight sentences they believe are AI-generated, others provide a percentage likelihood that AI writing tools were used to create the text.

Can AI Detectors Be Wrong?

Yes, AI detectors can definitely be wrong. As we mentioned earlier, every AI detector produces some false positives and false negatives.

Let’s look at an example. In a Washington Post test, Turnitin correctly classified only 6 out of 16 text samples (a mix of human-written text, AI text, and combinations of both). Moreover, Turnitin erred by classifying a text written by a human as AI-generated, which is a false positive.

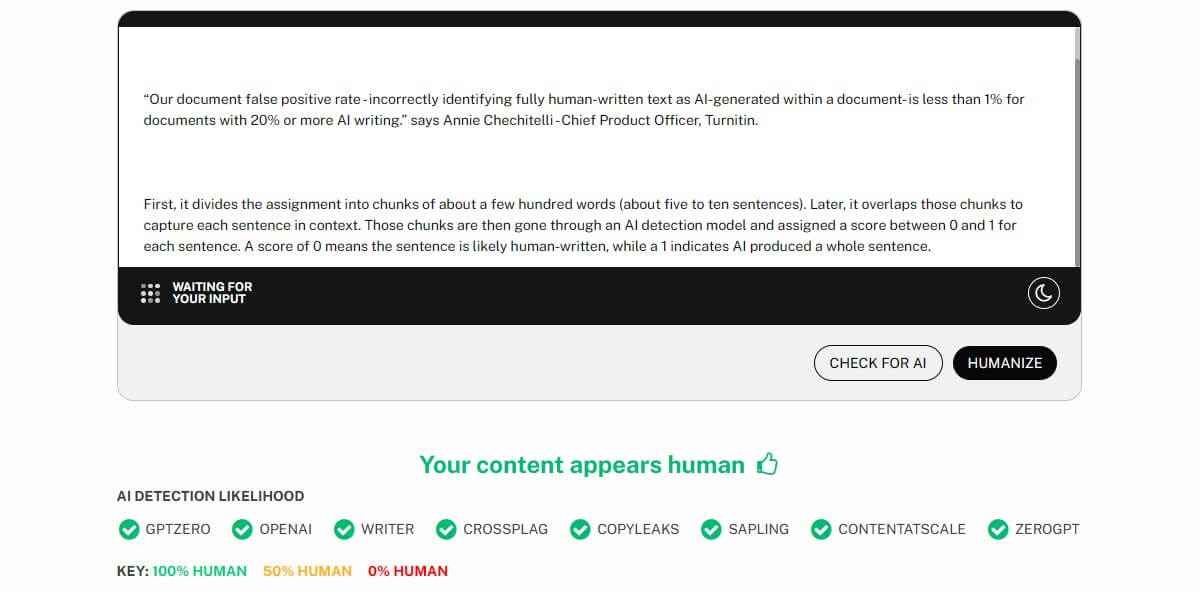

AI paraphrasing tools such as QuillBot, Wordtune, and AI writing tools with humanizing features like StealthWriter can make AI-generated content seem more human-like by imitating the writing style of humans. This leads to a false negative result where the AI detector wrongly identifies the AI-generated content as being written by a human.

Meanwhile, if a piece of human writing happens to contain any of the content signals in the table above, AI detectors are likely to mistake it for AI writing. This is known as a false positive, where the AI detector incorrectly flags human content as AI-generated.
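
The two error types described above can be expressed as simple rates. The following Python sketch is only an illustration: the function name `error_rates` and the sample labels are made up for this example and do not come from any real test.

```python
def error_rates(true_labels, predicted_labels):
    """Return (false_positive_rate, false_negative_rate).

    Labels are the strings "ai" or "human". A "positive" means the
    detector flagged the text as AI-generated.
    """
    pairs = list(zip(true_labels, predicted_labels))
    # Human text wrongly flagged as AI
    false_pos = sum(1 for t, p in pairs if t == "human" and p == "ai")
    # AI text wrongly passed as human
    false_neg = sum(1 for t, p in pairs if t == "ai" and p == "human")
    humans = sum(1 for t in true_labels if t == "human")
    ais = sum(1 for t in true_labels if t == "ai")
    fpr = false_pos / humans if humans else 0.0
    fnr = false_neg / ais if ais else 0.0
    return fpr, fnr


# Made-up example: 4 human texts followed by 4 AI texts.
truth = ["human"] * 4 + ["ai"] * 4
guess = ["human", "human", "human", "ai", "ai", "ai", "human", "human"]
fpr, fnr = error_rates(truth, guess)
print(f"False positive rate: {fpr:.0%}")  # False positive rate: 25%
print(f"False negative rate: {fnr:.0%}")  # False negative rate: 50%
```

Keeping the two rates separate matters because a detector can look good on one and poor on the other. A tool that rarely flags human work may still miss a lot of paraphrased AI text.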

How Reliable Are AI Detectors? Our Experience

What AI Detectors Say About Themselves

A number of AI detector tools, such as Originality AI, Copyleaks, Undetectable AI, and GPTZero, claim to be able to correctly tell users whether a piece of content was made by humans, AI, or a mix of the two.

Let’s see how confident they are when talking about their accuracy.

“We tested over 20k human-written papers, and the rate of false positives was 0.2%, the lowest false positive rate of any platform.” – CopyLeaks

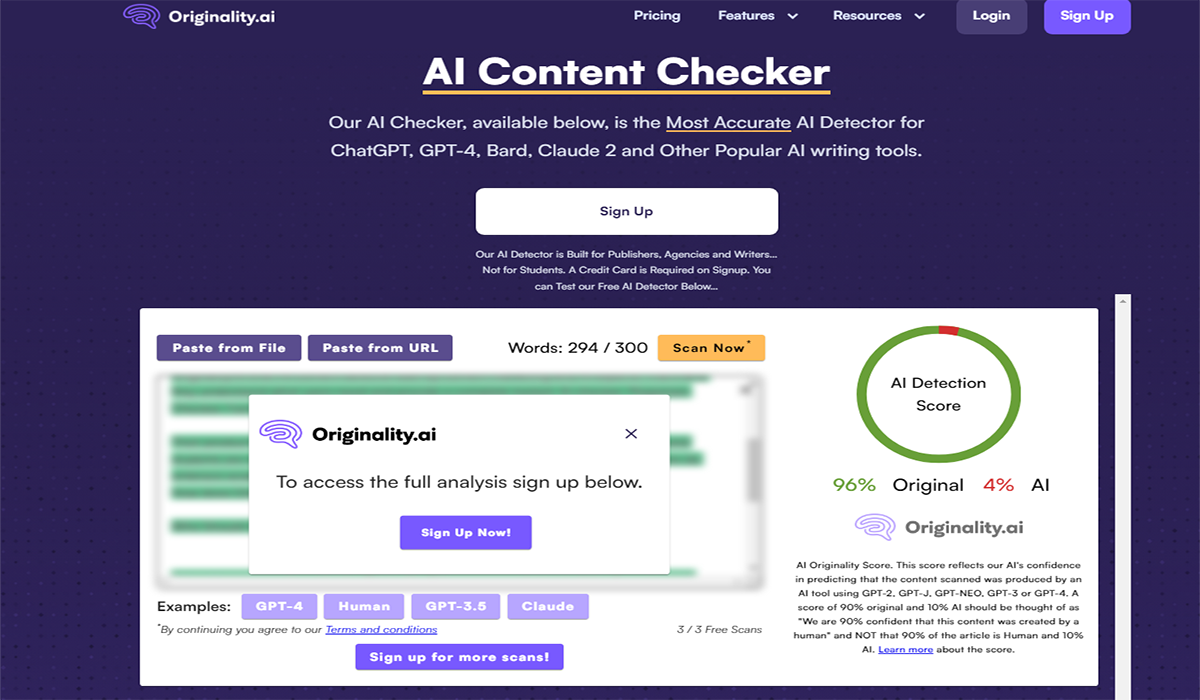

“We tested the trained model on documents generated by artificial intelligence models, including GPT-3, GPT-J, and GPT-NEO (20 thousand data each). Our model successfully identified 94.06% of the text created by GPT-3, 94.14% of text written by GPT-J, and 95.64% of text generated by GPT-Neo.” – Originality AI.

“Third-party tests report accuracy of 85-95%, making it one of the most accurate solutions available in 2024.” – Undetectable AI.

According to Farrokh Habibzadeh’s study, “In identifying AI-generated texts, GPTZero had an accuracy of 80%.”

How They Actually Performed When We Tested

After testing these claims, we found that none of them held up well in practice. Different detectors can yield different results for the same AI-generated text. Inconsistencies occur even within the same tool, and we saw far more false positives than the vendors announce.

We asked three of the best AI detectors to analyze a text consisting of 30% AI-generated content and 70% human-written content. All three detectors identified the text as being written by a human. However, only Originality AI could show the specific percentages of human and AI-generated text.

Different AI detectors use different algorithms to identify AI-generated writing, which explains why their results for the same text can differ.

However, the issue that annoys us the most is that running the same content through the same AI detector multiple times can produce inconsistent results.

From our perspective, no AI detector has 100% accuracy or anything close to it. At least, Originality AI agrees with us.
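
One way to put a number on this kind of inconsistency is to record the score from each run and look at how far the scores spread. The Python sketch below assumes a detector that reports an AI-likelihood score between 0 and 1. The function name `run_consistency`, the 0.5 threshold, and the sample scores are all hypothetical and are not measurements from any real tool.

```python
import statistics


def run_consistency(scores, threshold=0.5):
    """Summarize repeated detector scores for one and the same text.

    `scores` holds one AI-likelihood value (0 to 1) per run. A score at
    or above `threshold` counts as an "ai" verdict (an assumed cutoff).
    """
    verdicts = {"ai" if s >= threshold else "human" for s in scores}
    return {
        "spread": max(scores) - min(scores),   # largest gap between runs
        "stdev": statistics.pstdev(scores),    # population standard deviation
        "verdict_flips": len(verdicts) > 1,    # True if runs disagree
    }


# Made-up scores from five runs on the same text (not real measurements).
summary = run_consistency([0.25, 0.75, 0.5, 0.25, 0.75])
print(summary["spread"])         # 0.5
print(summary["verdict_flips"])  # True
```

A large spread or a flipped verdict on unchanged text suggests that a single run should not be treated as conclusive.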

“The world needs reliable AI detection tools, but no AI detection tool is ever going to be 100% perfect.” – Jonathan Gillham, Founder/CEO of Originality AI.

The Importance Of Human Review

At TechDictionary, we always advise readers not to rely too much on any AI writing tool. These tools are assistants, not substitutes. From our perspective, all AI-generated content needs to be reviewed and edited by humans. Here are some questions to consider when checking AI writing:

- Is the content helpful for readers?

- Does it make sense?

- Does this reading level fit our audience?

- Does it solve the main problems our readers are having?

- Are the facts correct?

- Are there reliable resources cited?

- Does this sound like a human wrote it?

We believe that AI writing tools are extremely helpful for content creation, particularly for small teams. The key is to control and adjust the AI-generated content as needed.

The Future of AI Content Detection

Many tools advertise their ability to accurately distinguish human-written from machine-generated content. However, we have not been able to verify that any of them effectively identifies the source of both types of writing.

At TechDictionary, we always remind readers that AI detection should be treated as a reference when checking for AI writing, not as something you can rely on 100%.